Project Overview

Phishing is one of the most widespread and damaging tactics used by attackers, responsible for a large share of security breaches worldwide. By disguising malicious emails as legitimate communication, attackers trick users into clicking harmful links, sharing credentials, or downloading malware. Its persistence and its effectiveness makes detection of phishing a critical skill for cybersecurity professionals.

In the following project, I use a Python program to aid in the phishing analysis of .eml files, which are commonly used to store raw email data including headers, body text, and embedded links. The objective of this tool is to provide an effective way to detect phishing indicators by combining rule‑based keyword and URL analysis with external threat intelligence from the VirusTotal API.

Python Program

import os

import re

import json

import base64

import argparse

from email import policy

from email.parser import BytesParser

from email.header import decode_header, make_header

from email.utils import parseaddr

from bs4 import BeautifulSoup

import tldextract

import requests

import os

# --------------------------

# Config

# --------------------------

SUSPICIOUS_KEYWORDS = [

"verify your account", "urgent", "update your password", "click here",

"login now", "security alert", "password expired", "confirm your identity",

"unusual activity", "restricted", "suspended", "invoice attached"

]

SUSPICIOUS_EXTENSIONS = [

".exe", ".scr", ".js", ".vbs", ".bat", ".cmd", ".ps1", ".hta", ".lnk", ".jar",

".docm", ".xlsm", ".zip", ".rar", ".7z", ".iso"

]

KNOWN_GOOD_DOMAINS = {

"microsoft.com", "google.com", "apple.com", "amazon.com", "outlook.com",

"workday.com", "adp.com", "wellsfargo.com", "chase.com", "bankofamerica.com", "paypal.com"

}

HIGH_RISK_BRANDS = [

"paypal", "microsoft", "google", "apple", "amazon", "netflix", "bankofamerica",

"wellsfargo", "chase", "office", "onedrive", "outlook", "teams", "instagram",

"facebook", "tiktok", "coinbase", "binance", "adp", "workday"

]

# How many "points" are added to phishing score

WEIGHTS = {

"display_name_mismatch": 2,

"replyto_mismatch": 2,

"keyword": 1,

"suspicious_attachment": 3,

"suspicious_url": 3,

"link_mismatch_or_obfuscation": 2,

"vt_malicious": 4,

"vt_suspicious": 2,

}

# Given total points, it determines if its likely phishing or if it needs review

THRESHOLDS = {

"likely_phish": 6,

"needs_review": 3

}

# --------------------------

# Helpers

# --------------------------

# Decodes email headers (like Subject) that may be encoded

def safe_decode(value):

if not value:

return ""

try:

return str(make_header(decode_header(value)))

except Exception:

return value

#Pulls out plain text and HTML body from email

def extract_text_and_html(msg):

text = ""

html = ""

if msg.is_multipart():

for part in msg.walk():

ctype = part.get_content_type()

if ctype == "text/plain":

try:

text += part.get_content()

except Exception:

pass

elif ctype == "text/html":

try:

html += part.get_content()

except Exception:

pass

else:

ctype = msg.get_content_type()

if ctype == "text/plain":

text = msg.get_content()

elif ctype == "text/html":

html = msg.get_content()

# Fallback: derive text from HTML if no plain text

if not text and html:

soup = BeautifulSoup(html, "lxml")

text = soup.get_text("\n")

return text, html

# Finds all URLs in the text and HTML

def extract_urls(text, html):

urls = set()

# Plain text URLs

for u in re.findall(r'(https?://[^\s"<>\)]+)', text, re.IGNORECASE):

urls.add(u.strip(").,;\"'"))

# HTML URLs

if html:

soup = BeautifulSoup(html, "lxml")

for a in soup.find_all("a", href=True):

urls.add(a["href"])

for tag in soup.find_all(src=True):

urls.add(tag["src"])

return list(urls)

# Uses tldrextract to get the domain from URL

def domain_from_url(url):

ext = tldextract.extract(url)

if ext.suffix:

return f"{ext.domain}.{ext.suffix}".lower()

return ext.domain.lower()

# Measures how similar two words are (used for typosquatting)

def levenshtein(a, b):

if a == b:

return 0

if not a:

return len(b)

if not b:

return len(a)

prev = list(range(len(b) + 1))

for i, ca in enumerate(a, 1):

cur = [i]

for j, cb in enumerate(b, 1):

cost = 0 if ca == cb else 1

cur.append(min(prev[j] + 1, cur[j-1] + 1, prev[j-1] + cost))

prev = cur

return prev[-1]

# Checks if the domain is a look-alike if a high-risk brand

def looks_like_typosquat(domain):

ext = tldextract.extract(domain)

base = ext.domain.lower()

full = f"{ext.domain}.{ext.suffix}".lower() if ext.suffix else base

if full in KNOWN_GOOD_DOMAINS:

return False, None

# digit/homoglyph hints

if re.search(r'[10oOIl]', base):

for b in HIGH_RISK_BRANDS:

if levenshtein(base, b) <= 2:

return True, b

# very close match

for b in HIGH_RISK_BRANDS:

if base != b and levenshtein(base, b) <= 1:

return True, b

return False, None

# --------------------------

# Checks

# --------------------------

# Looks for cases where the display name says "Microsoft (or other brand) but the emaii domain isn't Microsoft (or other brand)"

def check_display_name_spoof(from_header):

score, indicators = 0, []

name, addr = parseaddr(from_header or "")

dom = addr.split("@")[-1].lower() if addr else ""

if name and any(b in name.lower() for b in HIGH_RISK_BRANDS):

if dom and dom not in KNOWN_GOOD_DOMAINS:

score += WEIGHTS["display_name_mismatch"]

indicators.append(f"Display-name spoofing: {name} <{addr}>")

# Freemail with corporate-sounding name

if dom and any(dom.endswith(f) for f in ["gmail.com", "outlook.com", "yahoo.com"]):

if re.search(r"(support|billing|payroll|hr|helpdesk|security|admin)", (name or ""), re.I):

score += WEIGHTS["display_name_mismatch"]

indicators.append(f"Corporate-sounding display name on freemail: {name} <{addr}>")

return score, indicators

# Flags if the "Reply-To" domain is different from the "From" domain

def check_replyto_mismatch(from_header, replyto_header):

score, indicators = 0, []

_, faddr = parseaddr(from_header or "")

_, raddr = parseaddr(replyto_header or "")

if faddr and raddr:

fdom = faddr.split("@")[-1].lower()

rdom = raddr.split("@")[-1].lower()

if fdom != rdom:

score += WEIGHTS["replyto_mismatch"]

indicators.append(f"Reply-To domain differs from From domain: {fdom} -> {rdom}")

return score, indicators

# Searches the body for phishing keywords

def check_keywords(body_text):

score, indicators = 0, []

lower = (body_text or "").lower()

for kw in SUSPICIOUS_KEYWORDS:

if kw in lower:

score += WEIGHTS["keyword"]

indicators.append(f"Phishing language: '{kw}'")

return score, indicators

# Looks for HTML tricks: link text that doesn't match the actual link, Links using Javascript or data: schemes, and Hidden text via CSS

def analyze_html_for_tricks(html):

score, indicators = 0, []

urls_from_html = []

if not html:

return score, indicators, urls_from_html

soup = BeautifulSoup(html, "lxml")

# Link text vs href mismatches, data/javascript schemes, invisible content

for a in soup.find_all("a", href=True):

href = a["href"].strip()

text = a.get_text(strip=True)

if href.lower().startswith(("javascript:", "data:")):

score += WEIGHTS["link_mismatch_or_obfuscation"]

indicators.append(f"Suspicious link scheme: {href[:30]}...")

if text and "@" in text and href.lower().startswith("http"):

score += WEIGHTS["link_mismatch_or_obfuscation"]

indicators.append("Visible email text links to http URL")

# displayed domain vs actual

if text:

disp = re.findall(r'([a-z0-9\-]+\.[a-z\.]{2,})', text.lower())

real = domain_from_url(href) if href.startswith("http") else None

if real and disp and all(d not in real for d in disp):

score += WEIGHTS["link_mismatch_or_obfuscation"]

indicators.append(f"Displayed URL differs from destination: '{text}' -> {href}")

if href.startswith(("http://", "https://")):

urls_from_html.append(href)

# Hidden content via CSS

for tag in soup.find_all(True):

styles = tag.get("style", "")

if re.search(r"display\s*:\s*none|visibility\s*:\s*hidden", styles, re.I):

score += WEIGHTS["link_mismatch_or_obfuscation"]

indicators.append("Hidden content via CSS")

return score, indicators, urls_from_html

# Flags suspicious domains, raw IP addresses, or heavily encoded URLs

def check_urls(urls):

score, indicators, suspicious_urls = 0, [], []

for url in urls:

dom = domain_from_url(url)

sus, brand = looks_like_typosquat(dom)

if sus:

score += WEIGHTS["suspicious_url"]

indicators.append(f"Typosquatting suspected '{dom}' (brand: {brand})")

suspicious_urls.append(url)

if re.match(r"^https?://\d{1,3}(\.\d{1,3}){3}", url):

score += WEIGHTS["suspicious_url"]

indicators.append(f"URL uses raw IP: {url}")

suspicious_urls.append(url)

if url.count("%") > 5 or "@" in url:

score += WEIGHTS["suspicious_url"]

indicators.append(f"Obfuscated/unusual URL: {url}")

suspicious_urls.append(url)

return score, indicators, suspicious_urls

# Flags dangerous attachment types

def check_attachments(msg):

score, indicators = 0, []

for part in msg.iter_attachments():

filename = part.get_filename()

if filename and any(filename.lower().endswith(ext) for ext in SUSPICIOUS_EXTENSIONS):

score += WEIGHTS["suspicious_attachment"]

indicators.append(f"Dangerous attachment: {filename}")

return score, indicators

# Uses VirusTotal API key to check if any URLs are known malicious

def vt_url_reputation(urls, api_key):

score, indicators = 0, []

if not api_key or not urls:

return score, indicators

session = requests.Session()

session.headers.update({"x-apikey": api_key})

for url in urls:

try:

url_id = base64.urlsafe_b64encode(url.encode()).decode().strip("=")

r = session.get(f"https://www.virustotal.com/api/v3/urls/{url_id}", timeout=10)

if r.status_code == 404:

session.post("https://www.virustotal.com/api/v3/urls", data={"url": url}, timeout=10)

continue

if not r.ok:

continue

data = r.json().get("data", {}).get("attributes", {})

stats = data.get("last_analysis_stats", {})

if stats.get("malicious", 0) >= 1:

score += WEIGHTS["vt_malicious"]

indicators.append(f"VirusTotal: malicious for {url} ({stats.get('malicious')})")

elif stats.get("suspicious", 0) >= 1:

score += WEIGHTS["vt_suspicious"]

indicators.append(f"VirusTotal: suspicious for {url} ({stats.get('suspicious')})")

except Exception:

continue

return score, indicators

# --------------------------------------------------------------

# Analyzer

#

# Opens the .eml file and parses it into a python email object.

# Extracts:

# Subject, From, Reply-T0, Date, Body text and HTML, and URLs

# Runs all the detection checks above

# Adds up the score from all checks

# Decides the verdict:

# Score >= 6 --> "Likely Phishing"

# Score >= 3 --> "Needs Review"

# Score < 3 --> "Probably Safe"

# --------------------------------------------------------------

def analyze_eml(path, use_vt=True, verbose=False):

with open(path, "rb") as f:

raw = f.read()

msg = BytesParser(policy=policy.default).parsebytes(raw)

subject = safe_decode(msg["Subject"])

from_h = safe_decode(msg["From"])

replyto_h = safe_decode(msg["Reply-To"])

date_h = safe_decode(msg["Date"])

text, html = extract_text_and_html(msg)

urls = extract_urls(text, html)

total, ind = 0, []

s, i = check_display_name_spoof(from_h); total += s; ind += i

s, i = check_replyto_mismatch(from_h, replyto_h); total += s; ind += i

s, i = check_keywords(text); total += s; ind += i

s, i = check_attachments(msg); total += s; ind += i

s, i, html_urls = analyze_html_for_tricks(html); total += s; ind += i

urls = sorted(set(urls + html_urls))

s, i, sus_urls = check_urls(urls); total += s; ind += i

vt_key = os.environ.get("VT_API_KEY")

if use_vt and vt_key:

s, i = vt_url_reputation(urls, vt_key); total += s; ind += i

verdict = "Likely Phishing" if total >= THRESHOLDS["likely_phish"] else "Needs Review" if total >= THRESHOLDS["needs_review"] else "Probably Safe"

result = {

"subject": subject,

"from": from_h,

"reply_to": replyto_h,

"date": date_h,

"score": int(total),

"verdict": verdict,

"indicators": ind,

"urls": urls,

"suspicious_urls": sus_urls

}

if verbose:

result["body_preview"] = (text[:400] + "...") if text else ""

result["html_present"] = bool(html)

result["attachment_count"] = sum(1 for _ in msg.iter_attachments())

return result

# --------------------------

# CLI

# --------------------------

def main():

ap = argparse.ArgumentParser(description="Phishing analyzer (rule-based with optional VirusTotal URL reputation).")

ap.add_argument("eml", help="Path to .eml file")

ap.add_argument("--json", help="Write JSON report to file")

ap.add_argument("--no-vt", action="store_true", help="Disable VirusTotal URL checks")

ap.add_argument("--verbose", action="store_true", help="Extra details in output")

args = ap.parse_args()

res = analyze_eml(args.eml, use_vt=not args.no_vt, verbose=args.verbose)

# Human-readable

print(f"Subject: {res['subject']}")

print(f"From: {res['from']}")

if res.get("reply_to"):

print(f"Reply-To: {res['reply_to']}")

if res.get("date"):

print(f"Date: {res['date']}")

print(f"Score: {res['score']}")

print(f"Verdict: {res['verdict']}")

print("Indicators:")

for i in res["indicators"]:

print(f" - {i}")

if res["urls"]:

print("URLs found:")

for u in res["urls"]:

print(f" - {u}")

# JSON artifact

if args.json:

with open(args.json, "w", encoding="utf-8") as f:

json.dump(res, f, indent=2, ensure_ascii=False)

if __name__ == "__main__":

main()How The Program Works

The script parses .eml files to extract headers, body content, attachments, and embedded URLs. It then applies a series of detection rules to identify common phishing tactics, such as display name spoofing, suspicious reply-to addresses, phishing-related keywords, dangerous attachment types, and obfuscated or typosquatted URLs. The analyzer also inspects HTML content for deceptive links and hidden elements. If a VirusTotal API key is provided, the tool checks URLs against the VirusTotal database for known malicious or suspicious activity. Each finding contributes to an overall phishing risk score, which is used to classify the email as "Likely Phishing," "Needs Review," or "Probably Safe."

For example, attackers are known to spoof domains, display names, and create a sense of urgency in order to steal credentials.

Sample Email:

From: PayPal Support

<support@paypa1.com>

Subject: Urgent: Verify your account to avoid suspension

Reply-To:support@paypa1.com

Dear Customer,

We have detected unusual activity on your account. Click here to verify your account.

Thank you,

PayPal Security Team

In the example email above you can see the "from" and "reply-to" emails both end in "paypa1.com", which appears similar to the authentic "paypal.com". This tool is designed to catch this kind of malicious email spoofing by using a list of common high-risk brands (PayPal, Amazon, Wells Fargo, Chase, etc.) and comparing the similarity to the sender's domain. The attacker may also try to get the user to download malicious software. The tool looks for any attachments and more specifically inspects which file types are attached. If the file type has a suspicious extension, such as (.exe, .scr, .bat, .js, .jar, etc) it will flag it as suspicious and add to the overall risk score.

The tool also takes into consideration false positives by weighting risk. For example, in the program the following weights are assigned:

Display name mismatch: 2

Reply-to mismatch: 2

Keyword: 1

Suspicious Attachment: 3

Suspicious URL: 3

Link mismatch: 2

Virus Total (Malicious): 4

Virus Total (Suspicious): 2

Each indicator that is present is added and the total score is the deciding factor whether the email is "likely phishing" (6), "needs review" (3), "probably safe" (<3). By weighting the scores this way, it reduces the likelihood of a false positive. For example, if an email was sent by the authentic "paypal.com" saying a suspicious phrase such as "update your password" it would be marked as a 1 on the risk scale. This shows a level of risk associated with this email, but because there were no other risk factors associated, it is safe to assume the email is likely safe.

Results

Analysis 1

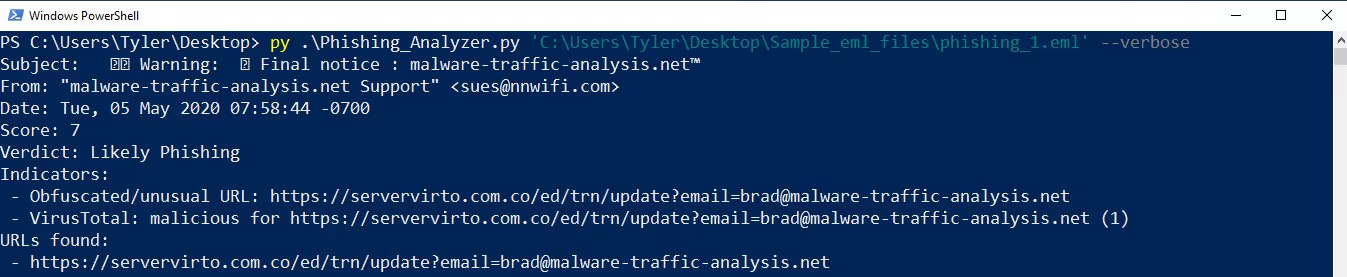

To test the validity of the program, I downloaded sample .eml files that are known to be malicious from malware-traffic-analysis.net against an email i sent, which I know is safe to see how the tool would perform.

Important! When downloading malware or sample data for analysis it is advised to only work with in a sandboxed environment such as a VM, to protect your system and network.

After the sample files were downloaded, I opened powershell and ran the following script using

py <path\to\program> <path\to\file>

py .\Phishing_Analyzer.py 'C:\Users\Tyler\Desktop\Sample_eml_files\phishing_1.eml'

In the results, we see the date, risk score, verdict, indicators, and URLs contained within this email. The scanner labeled this as "Likely Phishing" due to its risk score of 7. Based on the indicators and weighted scores we see the program took the all of the indicators and added their scores together to come up with the verdict.

Obfuscated/Unusual URL (Score: 3)

VirusTotal lookup verdict "Malicious" (Score: 4)

Total Risk Score: 7

One interesting detail to note from this particular .eml analysis is we can see how the attacker intended to trick the user. The URL found "https://servervirto.com.co/ed/trn/update?email=brad@malware-traffic-analysis.net" is inconspicuous at first glance, but has a .co after the .com, likely intended to spoof servervirto.com. The attacker could have used this to steal credentials, or potential lead the user to a malicious site containing malware, to further compromise the users system.

Analysis 2

To continue testing the validity of the tool, I use another sample .eml file downloaded from malware-traffic-analysis.net.

After running the script, the analyzer gave this email a score of 11 and concluded it is likely Phishing.

Looking at the indicators detected we see the attacker tried to trick the user in a couple of ways. First, the analyzer took note that the text links contained within the email, lead to an HTTP URL not an HTTPS. In doing so, the attacker can trick the user into using an insecure channel of communication as HTTP does not use SSLcryption that HTTPS offers. Meaning any data packets sent over this site could be intercepted by the attacker, which could contain sensitive information such as username/passwords, session tokens, or banking information. Next,the analyzer determined the attacker not only obfuscated the URL by adding .af after the .edu extension attempting to spoof the domain, they also have the displayed URL redirect to a different link.

Lastly, The analyzer ran this through the VirusTotal API which flagged this domain as malicious. All of these factors, indicate it is safe to say this email is likely malicious.

Analysis 3

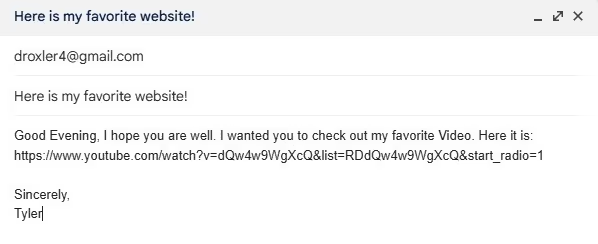

Now that we have seen the analyzer determine indicators of malicious activity, we need to check how it does in deciding safe emails, as false positives can occur. To test this, I sent myself an email that I know is safe.

As you can see, I pasted a YouTube link in the body of the email, but did not hyperlink it, rather it is written in plain text. The analyzer should detect this as a red flag even though the link itself is safe. This is to catch attackers who try to paste malicious links in plain text attempting to bypass spam filtering.

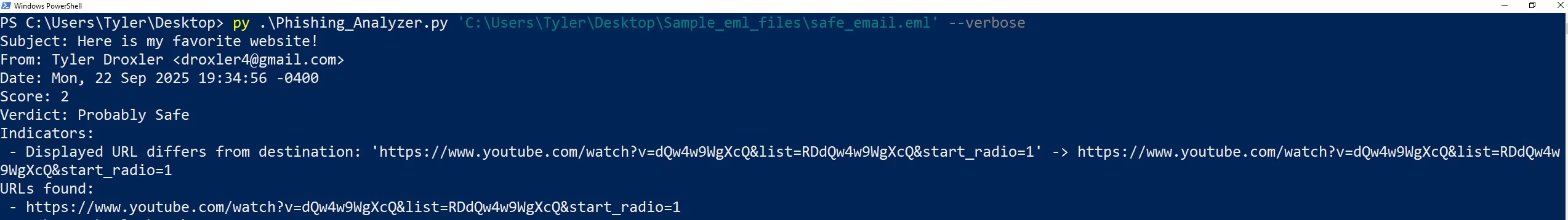

After downloading the .eml file and running it through our script we get the following results:

As you see the analyzer indicated the issue previously mentioned and gave this email a score of 2. Since, this is less than 3 it was given a verdict of "Probably Safe" this is a great example of why weighting the indicators is essential. If the scores weren't weighted the analyzer would detect this red flag and mark it as Phishing. By weighting the scores we can address the issue of false positives, because the email will only be labeled as phishing if there are multiple indicators or if the indicator is a MAJOR red flag automatically putting it over the threshold.

Since the analyzer determined the link is safe, feel free to check it out: https://www.youtube.com/watch?v=dQw4w9WgXcQ&list=RDdQw4w9WgXcQ&start_radio=1

Lessons Learned

Overall, this project showcases just how useful scripting and working alongside automation tools can help analysts in their investigations, as well as protect ordinary users. The tool can detect malicious activity that may have otherwise been overlooked. It also shows how phishing analyzing web extensions play a significant role in protecting users who are untrained. Phishing is the most common method attackers use to target individuals and organizations because humans are always the most vulnerable asset to an organization. People get tens, hundreds, even thousands of emails a month so statistically with enough time it is likely someone could be caught off guard by a well crafted phishing email and click a malicious link which can compromise their personal data leading to financial, reputational, or organizational damage. One click could result in millions of damage or data loss to an organization.

%201.svg)